Edge AI is transforming how machine learning operates by shifting from cloud-based processing to real-time inference on local devices, enabling faster responses, improved data privacy, and lower costs across industries. This blog explores how Edge AI and IoT are reshaping real-time computing, the advantages of on-device AI for security and efficiency, and the role of specialized hardware in optimizing machine learning models.

On this page

The Shift to Edge AI

The Role of IoT in Enabling Edge AI

Ethical Considerations

Into the Weeds

iPhones and Local Large Language Models

Choosing a Software Agency That’s Right for You

The Future of Edge AI

About the Author

At Valere, we’re always watching the latest trends in Machine Learning and Artificial Intelligence. If Moore’s Law continues to hold, chips will keep getting smaller and more capable, and NVIDIA’s stock will likely keep surging. Smaller chips mean that small devices, networked together in the so-called Internet of Things (IoT), will be able to run models that just a few years ago were unthinkably computationally expensive.

In recent years, a quiet revolution has been taking place in artificial intelligence (AI) and machine learning. While cloud-based solutions have long dominated the landscape, a new wave of AI is emerging, one that operates at the edge. Edge AI, powered by new hardware innovations, is transforming how we think about machine learning. By shifting processing from distant cloud servers to local devices, Edge AI offers many benefits, including reduced latency, improved data security, and lower costs. It is reshaping the future of machine learning, letting IoT devices run models on-device and letting powerful hand-held computers like the iPhone run large language models locally. Industries that are sensitive to real-time data latency (defense, aerospace) or subject to data privacy or security regulations that prohibit the use of cloud technologies should pay particular attention. We’re here to help.

Historically, machine learning models were developed, trained, and deployed on powerful cloud servers. This model relied on heavy computational resources and large-scale data centers to perform tasks like image recognition, natural language processing, and decision-making. While cloud-based AI has proven effective, it also presents significant challenges. Latency—the time it takes to send data to the cloud and receive results—can be problematic in real-time applications. Additionally, sending sensitive data to remote servers raises concerns around privacy and data security, especially in industries like healthcare, finance, and personal devices.

This is where Edge AI comes in. Edge AI refers to running machine learning algorithms directly on local devices, at the "edge" of the network, rather than sending data to a centralized server. The proliferation of connected devices, known collectively as the Internet of Things (IoT), combined with advancements in hardware like specialized AI chips, has made it possible to perform powerful ML tasks directly on smaller, resource-constrained devices.

Devices such as smartphones, smart cameras, wearables, and even industrial machinery can now process data and make decisions in real time, without relying on distant cloud servers. The result is faster, more efficient AI with less dependence on internet connectivity and centralized computing resources. With Edge AI, machine learning is no longer confined to the cloud—it’s happening right where the data is generated.

The rise of Edge AI is closely tied to the rapid growth of IoT devices. The IoT encompasses a wide range of interconnected devices that collect and share data with minimal human intervention. These devices include everything from smart thermostats and security cameras to industrial sensors and autonomous vehicles. The key to IoT’s success lies in the ability of these devices to gather vast amounts of data in real time and, increasingly, process that data locally.

IoT devices traditionally had limited processing capabilities. However, recent advances in hardware, particularly in specialized chips designed for AI tasks, have transformed what these devices can do. For instance, companies like Qualcomm and NVIDIA have developed AI accelerators and edge computing platforms that allow IoT devices to perform complex machine learning tasks without relying on cloud infrastructure.

Take, for example, a smart security camera. In the past, the camera would capture video and send it to the cloud for processing, where an AI model would analyze the footage for faces or unusual activity. Today, many modern security cameras are equipped with powerful AI chips that can perform real-time image recognition and alert users immediately, without ever sending the data to the cloud. This local processing not only reduces latency but also ensures that sensitive footage remains private and is not exposed to third-party servers.
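To make the latency argument concrete, here is a rough back-of-the-envelope sketch comparing the two paths for a single camera frame. Every number in it (frame size, uplink speed, round-trip time, inference times) is a hypothetical value chosen for illustration, not a measurement from any real camera or service.

```python
def cloud_latency_ms(frame_bytes, uplink_mbps, network_rtt_ms, server_inference_ms):
    """Time to upload one frame, run the model remotely, and get a result back."""
    upload_ms = (frame_bytes * 8) / (uplink_mbps * 1_000_000) * 1000
    return upload_ms + network_rtt_ms + server_inference_ms


def edge_latency_ms(device_inference_ms):
    """On-device inference has no network leg, only the local compute time."""
    return device_inference_ms


# Hypothetical numbers for illustration only.
cloud = cloud_latency_ms(
    frame_bytes=250_000,      # one compressed frame (assumed)
    uplink_mbps=10,           # home uplink speed (assumed)
    network_rtt_ms=60,        # round trip to the data center (assumed)
    server_inference_ms=15,   # fast server-side model (assumed)
)
edge = edge_latency_ms(device_inference_ms=40)  # slower local chip (assumed)
print(f"cloud: {cloud:.0f} ms, edge: {edge:.0f} ms")
```

Even when the local chip is slower than the server at running the model, the upload and round trip can dominate the cloud path under these assumptions.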

Edge AI is also helping in fields like healthcare, where wearables and medical devices are capable of monitoring patients' health metrics and making decisions autonomously. Instead of sending data to the cloud for analysis, these devices can analyze the data locally, providing immediate feedback to users and healthcare professionals. In critical situations, such as monitoring heart rate or blood oxygen levels, this speed is crucial.
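As a minimal sketch of what "analyzing the data locally" might look like on a wearable, the snippet below keeps a small rolling window of readings on the device and raises a flag when the average leaves a safe range. The class name `VitalsMonitor`, the thresholds, and the sample readings are all hypothetical; a real medical device would use clinically validated logic.

```python
from collections import deque


class VitalsMonitor:
    """Flags a reading when the rolling average leaves a configured range."""

    def __init__(self, low, high, window=5):
        self.low = low
        self.high = high
        # Only the most recent `window` readings are kept in memory.
        self.readings = deque(maxlen=window)

    def add(self, value):
        """Record a reading; return True if the rolling average is out of range."""
        self.readings.append(value)
        average = sum(self.readings) / len(self.readings)
        return average < self.low or average > self.high


# Hypothetical blood-oxygen readings (percent) and thresholds.
monitor = VitalsMonitor(low=92, high=100, window=3)
alerts = [monitor.add(v) for v in [97, 96, 93, 90, 88, 87]]
print(alerts)
```

Because the check runs entirely on the device, the alert does not depend on a network connection, and the raw readings never have to leave it.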

Apart from the technical advantages of edge ML models, the big question is how you feel about who owns or has access to your data, and whether your technical team has implemented the right security at the appropriate stage of the information flow. If, like me, you’re allergic to the way that big tech has bulldozed past any serious consideration of intellectual property, or you have particular reasons why you can’t share your data, then do all the processing as far upstream as possible. However, be aware that you will also not be contributing back to the general advancement of the models that will continue to compete for larger, more complicated AI or ML tasks. Also, just because your data lives only on your phone or IoT device doesn’t mean that it can’t be stolen, hacked, or otherwise appropriated, so be careful both to secure your devices and to pay attention to any terms of service that may apply.

NPUs (Neural Processing Units) and TPUs (Tensor Processing Units) are specialized hardware accelerators designed to speed up machine learning tasks, but they have different architectures, purposes, and use cases, especially in the context of edge computing. Both are critical when optimizing for response time on edge devices.

NPUs are designed to accelerate neural network computations in general. They are optimized for a broad range of machine learning tasks, including deep learning models, and are intended to be highly versatile. When real-time inference is critical for general-purpose models, NPUs are a good choice. TPUs, developed by Google, are highly optimized for tensor processing, specifically to accelerate the matrix operations used in deep learning, particularly for training and inference of TensorFlow models. On edge devices, the relevant variant is Google’s Edge TPU, which targets inference rather than training.
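To illustrate the kind of matrix operation these accelerators are built around, here is a plain-Python sketch of a single dense layer that also counts its multiply-accumulate (MAC) operations. The function name `dense_layer` and the tiny weights are made up for the example; real accelerators run many of these MACs in parallel in hardware rather than in a loop.

```python
def dense_layer(weights, inputs, biases):
    """Compute weights @ inputs + biases with plain loops, counting MACs."""
    outputs = []
    macs = 0
    for row, bias in zip(weights, biases):
        total = bias
        for w, x in zip(row, inputs):
            total += w * x  # one multiply-accumulate
            macs += 1
        outputs.append(total)
    return outputs, macs


# A 2x3 weight matrix applied to a 3-element input (illustrative values).
weights = [[1.0, 2.0, 3.0], [0.5, -1.0, 0.0]]
outputs, macs = dense_layer(weights, inputs=[1.0, 0.0, 2.0], biases=[0.0, 1.0])
print(outputs, macs)
```

The MAC count grows with the product of the layer's input and output sizes, which is why hardware that performs many MACs per clock cycle matters so much for response time.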

On the software side, two commonly used frameworks are TensorFlow Lite (TFLite) and PyTorch Mobile.

TFLite is the lightweight version of TensorFlow, designed specifically for mobile and embedded devices. It enables on-device inference with a focus on performance, efficiency, and low latency.
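One optimization TFLite offers for shrinking models is post-training quantization, which stores weights as 8-bit integers instead of 32-bit floats. The sketch below shows the basic idea in plain Python using a single symmetric scale; it is a simplified illustration, not TFLite's actual implementation, and the function names and weight values are assumptions made for the example.

```python
def quantize_int8(values):
    """Map floats to the int8 range [-127, 127] using one shared scale."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid dividing by zero
    scale = max_abs / 127
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the int8 values."""
    return [q * scale for q in quantized]


# Illustrative weights only.
weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, scale)
print(restored)
```

Each weight now needs one byte instead of four, at the cost of a small rounding error bounded by half the scale.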

Key Features:

Workflow for Using TensorFlow Lite:

Edge Device Inference: The model performs inference on the edge device, allowing real-time processing of data without needing a cloud connection.

Example Use Cases:

Sensor Data Processing: Edge devices analyzing sensor data in IoT applications (e.g., smart home systems, industrial monitoring).

Advantages of TensorFlow Lite:

PyTorch Mobile is a framework within the PyTorch ecosystem that enables the deployment of machine learning models on mobile and embedded devices. Like TensorFlow Lite, PyTorch Mobile is designed to make deep learning models run efficiently on edge devices while maintaining the flexibility of PyTorch.

Key Features:

Workflow for Using PyTorch Mobile:

Example Use Cases:

One of the most exciting developments in Edge AI is the ability to run large language models (LLMs) directly on devices like the iPhone. Until recently, running LLMs, such as GPT-style models, required vast computational resources and was only feasible in the cloud. These models, with their billions of parameters, require immense processing power, typically provided by large-scale cloud data centers.

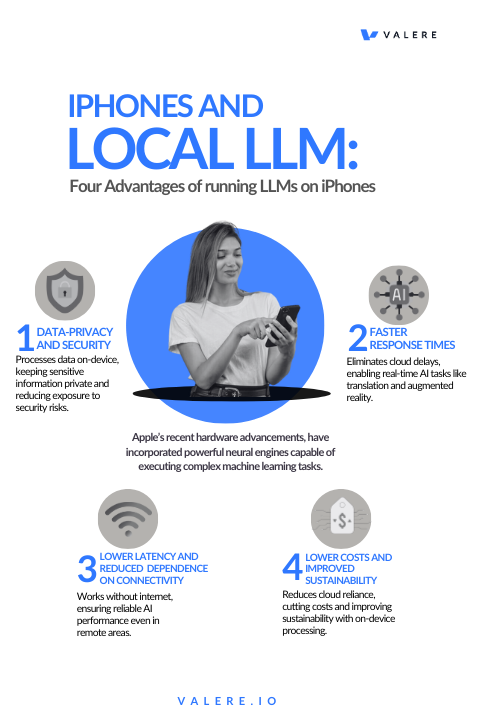

However, Apple’s recent hardware advancements, particularly its custom silicon (the A-series chips in iPhones and the M-series chips in iPads and Macs), have changed the game. These custom-designed processors incorporate a powerful Neural Engine capable of executing complex machine learning tasks. With these chips, it’s now possible for an iPhone to run sophisticated AI models locally, including smaller LLMs.
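A rough way to see why parameter count and numeric precision decide whether an LLM fits on a phone is to estimate the storage its weights need. The sketch below does that arithmetic; the model sizes and bit widths are hypothetical examples, not figures for any specific Apple model or product, and the estimate ignores activations and other runtime memory.

```python
def model_size_gb(num_parameters, bits_per_parameter):
    """Approximate weight storage in gigabytes (10**9 bytes)."""
    return num_parameters * bits_per_parameter / 8 / 1e9


# Hypothetical model sizes and precisions, for illustration only.
for params in (3e9, 7e9):
    for bits in (16, 8, 4):
        size = model_size_gb(params, bits)
        print(f"{params / 1e9:.0f}B params at {bits}-bit: {size:.1f} GB")
```

Under these assumptions, a 7-billion-parameter model drops from about 14 GB at 16-bit precision to about 3.5 GB at 4-bit, which is the difference between not fitting and plausibly fitting in a phone's memory.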

Running LLMs on the device offers several distinct advantages:

Perhaps the most significant benefit of running LLMs on local devices is enhanced data security and privacy. By processing user queries and interactions directly on the device, sensitive information never leaves the user’s phone. This is especially important in an era where concerns about data breaches and surveillance are at an all-time high.

For example, consider an iPhone running a voice assistant. Instead of sending voice data to Apple’s cloud servers for processing, the iPhone can process the audio locally, ensuring that private conversations stay private. Similarly, if a user is working with sensitive documents or health-related queries, the device can process and analyze that data without sending it to the cloud. This minimizes the risk of exposing personal information to third-party servers.

Edge AI allows for faster response times by eliminating the need to send data over the internet to a distant server. This is particularly noticeable in applications like real-time language translation, augmented reality (AR), and on-device search. In the case of LLMs, this means that when a user asks a question or provides a prompt, the iPhone can process the request and begin generating a response without waiting on a network round trip to a cloud service.

The immediate response not only improves user experience but is also crucial for real-time applications in industries such as autonomous driving, healthcare, and robotics, where delay can have serious consequences.

Running LLMs locally on devices like the iPhone also reduces the dependence on a stable internet connection. In regions with poor or no connectivity, Edge AI ensures that users can still access powerful AI capabilities. This is particularly important for users in rural areas or when traveling, where cloud-based services might be inaccessible due to bandwidth limitations or network outages.

Moreover, local processing ensures that AI models can continue to work even in scenarios where there is intermittent or low bandwidth. This reliability makes Edge AI especially valuable for applications in remote or critical environments.

By offloading processing to local devices, companies can reduce their reliance on cloud infrastructure, cutting down on server costs and energy consumption. From a sustainability standpoint, Edge AI can be more energy-efficient because it reduces the load on large data centers running 24/7. Additionally, fewer resources are required to transmit large volumes of data back and forth between local devices and the cloud.

At Valere, our distributed, international teams each specialize in different types of development. Before deciding on an agency, first think about your company’s use case. In general, the ease of development with industry-standard APIs argues for a traditional software model, and Edge AI is not for everyone because of its increased costs and a certain amount of fixed overhead. However, Valere’s powerful Discovery process lets us help you look under the hood and decide whether your AI-driven transformation requires specialized workflows that might include working directly at the device level. Our flexible, customer-centric approach is what distinguishes us from the rest of the pack.

Edge AI represents a paradigm shift in the world of machine learning, enabling decentralized, real-time AI applications that are faster, more secure, and more efficient than traditional cloud-based solutions. As IoT devices continue to proliferate and hardware advancements make local processing more powerful, we can expect Edge AI to play an increasingly significant role in a variety of industries.

For consumers, devices like the iPhone are already demonstrating the potential of Edge AI, running advanced models like LLMs directly on the device, offering privacy, security, and lightning-fast responses. For businesses, Edge AI offers the opportunity to improve operational efficiency, reduce latency, and unlock new capabilities that were previously out of reach.

Ultimately, as the Internet of Things continues to expand and the power of Edge AI grows, the possibilities are limitless. Edge AI is not just the future; it’s happening right now, and companies that embrace this new paradigm will be the ones that win out.

Hi, I'm Guy Pistone, CEO & Co-Founder of Valere, a leading global tech and AI agency. With over a decade of experience building successful applications, I've driven innovation across industries.

My journey began with Fitivity, a sports training platform that I grew to 15 million users through the power of AI. This success led to its acquisition, followed by the creation of Elete, a groundbreaking sports app leveraging AI for performance enhancement, which was also successfully acquired.

At Valere, I lead a team of over 200 employees across five countries, delivering cutting-edge AI solutions for businesses worldwide. My expertise has been recognized through awards like "Top Executive of the Year" and distinctions as an Expert Vetted Developer and AI Consultant on platforms like Upwork.

Beyond my professional endeavors, I'm passionate about investing in the future of AI. As a member of the LaunchPad Angel Group in Boston, I actively support promising ventures in life sciences and biosciences.

Let's connect if you're interested in building meaningful things with AI. Visit us at Valere.io or follow me here, on LinkedIn.
