Imagine a world where AI is seamlessly integrated into our daily lives, from personalized healthcare to self-driving cars. With this potential comes a crucial question: how do we ensure AI is developed and used responsibly? The European Union (EU) has long been a leader in shaping legal frameworks for emerging technologies, and AI is no exception. Valere, a team deeply invested in the responsible development of AI, keeps a close eye on these evolving regulations. The EU has taken significant strides with the AI Act, a comprehensive framework intended to ensure the safe, ethical, and responsible development and use of AI. The United States (US) approach is still evolving, but it shares several key goals with the EU's. As we navigate this rapidly changing landscape, understanding both sets of rules becomes essential. So which approach do you think will have a greater global impact: the EU's AI Act or the US framework?

On this page

The EU's AI Act: A Framework for Trustworthy AI

The US Approach to AI: Principles for Responsible Development

Similarities and Differences: A Comparative Analysis

The Evolving Landscape of AI Regulation

Valere: Your Partner in AI Innovation

The EU's AI Act: A Framework for Trustworthy AI

The EU's centerpiece for AI regulation is the AI Act, a comprehensive legal framework designed to foster trustworthy AI. Here's a breakdown of its key aspects:

The AI Act outlines different levels of regulation based on risk:

The EU recognizes the importance of fostering innovation alongside responsible development. It also plans to provide testing environments, known as regulatory sandboxes, where startups and small businesses can refine AI models before public release.

For a deeper dive into the EU AI Act, see the European Parliament's article "EU AI Act: First Regulation on Artificial Intelligence."

The US Approach to AI: Principles for Responsible Development

While the US doesn't have a single, comprehensive AI regulation like the EU's AI Act, the federal government has outlined eight guiding principles for the responsible development and use of AI. These principles, summarized in the White House's "Fact Sheet: President Biden Issues Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence", aim to:

The US approach places particular emphasis on data privacy, which it addresses on several fronts, including:

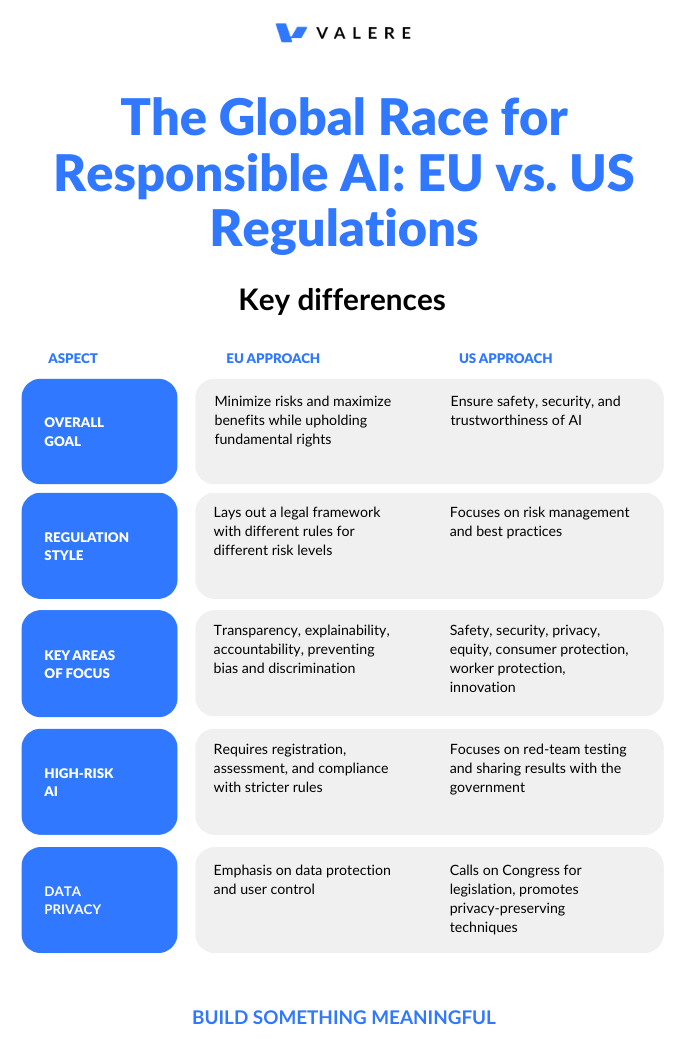

Similarities and Differences: A Comparative Analysis

Despite their distinct approaches, the EU and the US share several key goals:

Here's a table summarizing the key differences between the EU's and the US's approaches to AI regulation:

The Evolving Landscape of AI Regulation

The global landscape of AI regulation is dynamic. While the EU has established a comprehensive legal framework through the AI Act, the US approach continues to evolve through ongoing discussions and initiatives. This ongoing process highlights a key similarity: both regions are committed to fostering responsible AI development through collaboration and continuous improvement.

As you navigate the complexities of AI regulation, a few resources can help. The US government's AI.gov website provides a central hub for federal AI initiatives. The Global Partnership on AI (GPAI) offers a collaborative platform for promoting responsible AI development on a global scale.

Which risk poses the greatest challenge for AI regulation is still an open question. Here at Valere, we believe the answer is likely multifaceted, encompassing concerns such as data privacy, algorithmic bias, and cybersecurity threats.

Valere: Your Partner in AI Innovation

Valere’s team of AI experts is dedicated to bridging the gap between human factors and these powerful new technologies. By staying up to date on the latest regulations, our team can help you navigate the evolving AI landscape responsibly.

If you're embarking on your own AI project, Valere can be your ideal partner, guiding you through every step of the process, from navigating regulations to ensuring ethical development and implementation.

Ready to unlock the transformative power of AI for your business? Contact Valere today and let's discuss your AI project.

About the Author

Alex Turgeon is a marshal of Customer Experience with over 10 years of experience designing and launching digital experiences and AI-powered products. Alex has supported numerous commercial, B2B, enterprise, and US federal government clients, empowering the rise of the digital citizen and working with notable organizations such as Digital.gov in presenting "Customer Experience & Continuous Improvement: The USPS.com Digital Approach." Currently, Alex is the Chief Growth Officer of Valere and Founder of Valere Digital, Valere’s US-based office, whose work has yielded millions in revenue, downloads, and funding.
